Adaptive Streaming in the Field

Adaptive streaming sounds easy enough: produce multiple files with different quality-related configurations, start with a stream that matches the viewer's bandwidth and playback platform, then adapt to changing throughput conditions and the varying CPU load on the viewer's platform. As with all things streaming, however, the devil is in the details. How many streams should you produce, and at what resolutions? What keyframe interval should you use? Should you encode using VBR or CBR? What audio parameters should you use, and should they change from stream to stream? And what's the most efficient way to address those pesky iOS devices?
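
The basic adaptation loop described above can be sketched in a few lines. This is a minimal illustration, not any player's actual heuristic: it assumes the player reports recent throughput in Kbps and a ratio of dropped frames (a rough proxy for CPU load), and it picks the highest-bitrate stream that fits under a hypothetical 80% safety margin. The data rates are the three Harvard uses for live events, discussed later in this article; the margin, the dropped-frame threshold, and the function name are assumptions.

```python
from typing import Sequence

# Hypothetical safety margin: use only 80% of measured throughput so that
# short-term dips don't immediately stall playback.
SAFETY_MARGIN = 0.8


def select_stream(bitrates_kbps: Sequence[int], throughput_kbps: float,
                  dropped_frame_ratio: float = 0.0,
                  max_dropped_ratio: float = 0.1) -> int:
    """Return the bitrate (Kbps) of the stream to play next.

    Picks the highest bitrate that fits within the discounted throughput.
    If the player is dropping too many frames (assumed to signal CPU
    overload), steps down one level from that choice.
    """
    ladder = sorted(bitrates_kbps)
    budget = throughput_kbps * SAFETY_MARGIN
    # Index of the highest stream that fits the budget; default to the lowest.
    index = 0
    for i, rate in enumerate(ladder):
        if rate <= budget:
            index = i
    # CPU constraint: too many dropped frames means step down one level.
    if dropped_frame_ratio > max_dropped_ratio and index > 0:
        index -= 1
    return ladder[index]


if __name__ == "__main__":
    ladder = [1152, 564, 132]  # Harvard's live stream data rates, in Kbps
    print(select_stream(ladder, throughput_kbps=2000))  # 1152
    print(select_stream(ladder, throughput_kbps=1000))  # 564
    print(select_stream(ladder, throughput_kbps=1000, dropped_frame_ratio=0.25))  # 132
    print(select_stream(ladder, throughput_kbps=100))  # 132
```

Real players smooth throughput over time and add hysteresis to avoid rapid switching; the sketch omits both to keep the core decision visible.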

To answer these questions, we contacted several organizations using different technologies and asked each of them in detail about their configurations and workflows. Participants included MTV, Turner Broadcasting, NBC (through Microsoft), Indiana University (IU), Harvard University, and Deutsche Welle, and I want to thank them up front for sharing their experiences. These organizations use a variety of technologies, including Adobe Flash, Microsoft Silverlight, and Apple HTTP Live Streaming, which supports my goal of presenting a technology-neutral collection of encoding and implementation details.

How Many Streams?

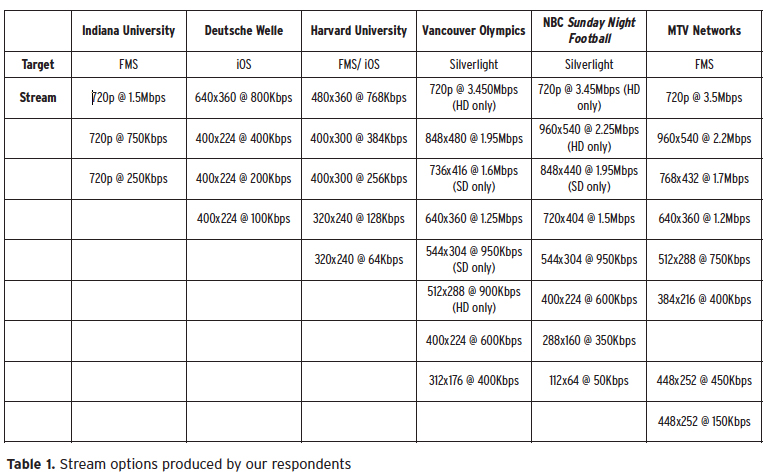

As you would expect, I saw significant variability in the number of streams and their configurations. Table 1 (below) lists the configurations used by our respondents. Note that the table doesn't show every configuration for every activity; where a producer handles different activities differently (Harvard, for example, treats live and on-demand events differently), the table shows the alternative with the most configuration options. I didn't include Turner Broadcasting in the table because, although it shared data rates and other information, Turner didn't provide the resolution, frame rate, and other details discussed later.

At Indiana University, I spoke with Matthew Gunkel, multimedia specialist at the Kelley School of Business, who advised that all IU streams are produced at 29.97 fps. Deutsche Welle's configurations generally follow the direction given by Apple in its Technical Note (which should be required reading for anyone considering or implementing HTTP Live Streaming). Multimedia developer Jan Petzold shared that Deutsche Welle produces the first two streams at full frame rate (25 fps), the 200Kbps stream at 12.5 fps, and the 100Kbps stream at 8 fps.

At Harvard, Larry Bouthillier, media technology architect for the Office of the University CIO, explained that for live events, which are distributed via both Flash Media Server (FMS) and HTTP Live Streaming, he uses three streams: 800x600@1152Kbps, 400x300@564Kbps, and 320x240@132Kbps. The highest-quality stream is delivered via FMS only, while the other two are delivered via both technologies. Harvard encodes its on-demand videos at full frame rate, except for streams below 150Kbps, which are encoded at 15 fps.
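
Harvard's on-demand frame-rate rule is simple enough to express as a function, which can be handy when generating encoder presets from a list of data rates. The sketch below is a hypothetical helper, not Harvard's tooling: it assumes the source frame rate is known, treats "less than 150Kbps" as a strict threshold, and never raises the frame rate above the source's own rate.

```python
# Threshold and reduced rate taken from Harvard's described on-demand rule.
LOW_BITRATE_THRESHOLD_KBPS = 150
REDUCED_FPS = 15.0


def on_demand_frame_rate(bitrate_kbps: float, source_fps: float) -> float:
    """Frame rate for an on-demand stream: full (source) frame rate,
    except streams below 150Kbps, which drop to 15 fps.

    min() guards against "reducing" a source that is already below 15 fps.
    """
    if bitrate_kbps < LOW_BITRATE_THRESHOLD_KBPS:
        return min(REDUCED_FPS, source_fps)
    return source_fps


if __name__ == "__main__":
    # For illustration only: Harvard's live data rates with a 29.97 fps source.
    for rate in (1152, 564, 132):
        print(rate, on_demand_frame_rate(rate, 29.97))
```

With these inputs, the 1152Kbps and 564Kbps streams keep 29.97 fps while the 132Kbps stream drops to 15 fps.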

For the Vancouver Olympics and NBC's Sunday Night Football, I spoke with Alex Zambelli, the Microsoft media technology evangelist who architected the live Smooth Streaming workflows for both productions. Zambelli related that all streams from the Vancouver Olympics were produced at full frame rate (29.97 fps). Sunday Night Football produces all streams at 29.97 fps except the last one, a 112x64 stream used only for thumbnail previews, which is produced at 15 fps.

In both instances, NBC distributed both HD-source and SD-source video. For the Olympics, there were two HD-only stream configurations and two SD-only configurations, including an 848x480 SD stream from an interlaced SD source. For Sunday Night Football, NBC produces two HD-only streams (720p and 960x540) and one SD-only stream (848x480), and uses all other configurations for both SD and HD sources.

At MTV Networks, I spoke with Glenn Goldstein, VP, media technology strategy. As Goldstein explained, MTV creates two groups of files for adaptive delivery: one for "broadband" clients, meaning desktop computers with high-speed connections, and one for "constrained" clients, meaning devices or computers limited by decoding CPU power or connectivity. MTV assigns viewers to a group upon connection and keeps them within that group for the duration of that connection. In Table 1, the top configurations are for the broadband group; the bottom two are for the constrained group.
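
The key design point in MTV's approach is that group membership is decided once, at connection time, and adaptation then happens only within that group. The minimal sketch below illustrates that idea; the classification inputs, the 1,500Kbps cutoff, the per-group data rates, and the `Session` class are all hypothetical placeholders rather than MTV's actual values or code (MTV's real configurations are in Table 1).

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical ladders in Kbps; placeholders, not MTV's real configurations.
GROUPS = {
    "broadband": [3500, 2200, 1700, 1200, 750],
    "constrained": [450, 200],
}

# Hypothetical cutoff used only for the one-time classification.
BROADBAND_MIN_KBPS = 1500


@dataclass
class Session:
    group: str
    ladder: List[int] = field(default_factory=list)

    @classmethod
    def connect(cls, initial_throughput_kbps: float,
                is_constrained_device: bool) -> "Session":
        """Assign the viewer to a group once, when the connection starts."""
        if is_constrained_device or initial_throughput_kbps < BROADBAND_MIN_KBPS:
            group = "constrained"
        else:
            group = "broadband"
        return cls(group=group, ladder=sorted(GROUPS[group]))

    def choose(self, throughput_kbps: float) -> int:
        """Adapt within the assigned group only; never cross into the other."""
        fitting = [rate for rate in self.ladder if rate <= throughput_kbps]
        return fitting[-1] if fitting else self.ladder[0]


if __name__ == "__main__":
    desktop = Session.connect(5000, is_constrained_device=False)
    print(desktop.group, desktop.choose(2000))  # broadband 1700
    print(desktop.choose(300))  # 750: stays in broadband even when throughput dips
    phone = Session.connect(5000, is_constrained_device=True)
    print(phone.group, phone.choose(5000))  # constrained 450
```

Keeping viewers inside one group trades some flexibility (a desktop viewer whose connection collapses can't fall to the constrained streams) for predictable quality within each class of client.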

Goldstein also advised that MTV Networks encodes all files at the native frame rate of the footage, which may be 23.98, 24, 25, or 29.97 fps. Where necessary, MTV applies inverse telecine to film-originated footage to restore the original frame rate before encoding.
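
The arithmetic behind inverse telecine explains where the 23.98 figure comes from. The sketch assumes standard 3:2 pulldown of film-originated material to NTSC 29.97 fps video. It only computes the recovered frame rate and does not perform any actual field matching. The function name is hypothetical.

```python
from fractions import Fraction

# NTSC video rate: exactly 30000/1001 frames per second (commonly written 29.97).
NTSC_VIDEO_FPS = Fraction(30000, 1001)

# 3:2 pulldown spreads 4 film frames over 10 fields, i.e. 5 interlaced video
# frames. Inverse telecine discards the repeated fields, so every 5 video
# frames become 4 progressive frames again.
PULLDOWN_RATIO = Fraction(4, 5)


def inverse_telecine_fps(video_fps: Fraction) -> Fraction:
    """Frame rate recovered by removing 3:2 pulldown from telecined video."""
    return video_fps * PULLDOWN_RATIO


if __name__ == "__main__":
    film_fps = inverse_telecine_fps(NTSC_VIDEO_FPS)
    print(film_fps)  # 24000/1001, i.e. roughly 23.976 fps
```

Encoding at the recovered rate means 20% fewer frames per second to encode than the telecined 29.97 version, with no repeated fields.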

Note that Adobe supplies configuration and encoding recommendations for Dynamic Streaming in "Dynamic Streaming on demand with Flash Media Server 3.5," by Abhinav Kapoor, and "Video Encoding and Transcoding Recommendations for HTTP Dynamic Streaming on the Flash Platform," by Maxim Levkov. Microsoft's Ben Waggoner weighs in with similar information for Silverlight in "Expression Encoder 2 Service Pack 1; Intro and Multibitrate Encoding." I'll refer to these three articles and the Apple Technical Note where relevant in this article.
