How to Maximize Your Metadata

This article appears in the February/March 2012 issue of Streaming Media magazine, the annual Streaming Media Industry Sourcebook.

Metadata’s not the most exciting topic for Sourcebook readers, but it is one of the most important if you want to generate interest in your content.

Why go to the trouble of extracting metadata? Metadata creation and extraction are laborious, even with the slew of automated tools, primarily because the tools are susceptible to a number of false positives or become confused by elements within the video or audio tracks.

Still, there are key reasons to spend the time to create or extract metadata for your online video.

- Make particular clips easier to find. We often think of online video as a set of assets with tags, titles, and descriptions, but there are many other detailed metadata options that can help with enterprise asset management or production workflows, provided the proper metadata is available.

- Provide new services for customers. The M word (monetization) is often used as justification for the pain accompanying video metadata extraction, but it turns out to be true. Thought Equity Motion, Inc. (TEM), a company that provides live and on-demand metadata creation and extraction for sports teams, found that its customers’ viewers spent significantly more time watching classic game content if detailed metadata was made available to search. The average engagement jumped from less than 20 seconds to several minutes in one case (see our Metadata Buyer’s Guide for the TEM example).

- Make archiving and retrieval of offline storage easier. Beyond postproduction workflows and consumer media consumption, metadata is also key for preservation-related aspects of digital file storage. Many files in an enterprise environment or post-production facility are stored offline, so the only information available to find these files is the metadata associated with the file and an occasional still image representing the video file.

- Bring in-file and external metadata into compliance with a standard format (XML) for use in preservation or monetization activities. If you’ve not heard of DASH, you will soon. Its XML-based media presentation description (MPD) is the MPEG standards committee’s attempt to present certain types of metadata in a consistent way so that content encoded in one adaptive bitrate solution can be decoded by any DASH-compliant player.

Different Needs, Different Methods

There are three primary ways to extract or create metadata. Telestream, Inc., a company that provides end-to-end solutions for encoding and transcoding, published a white paper a few years ago with a concise three-step approach to metadata creation. The steps are straightforward:

- Extract metadata from closed captions and other embedded video information in a source file.

- Reuse existing metadata when it is present.

- Create annotations or tagging.

The first two steps sound less painful, but we’re going to cover all three approaches since manual creation of annotation or tagging can have significant monetization and asset management benefits. In reality, given the limited consistency of speech-to-text and visual pattern recognition, even those who rely on automated metadata extraction will find themselves falling back on manual metadata creation or corrections to automated metadata capture.


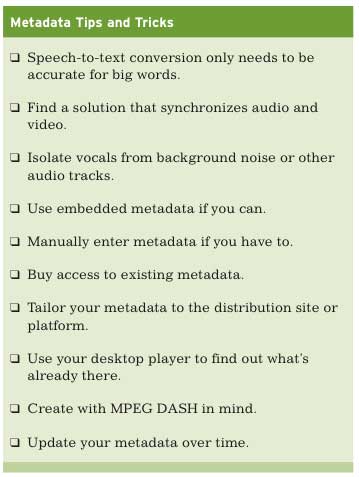

Here are a few simple tricks and hints to consider when doing metadata extraction:

1. Speech-to-text conversion only needs to be accurate for big words. For general speech, the hardest words for a speech-to-text conversion system to pick up are small function words (e.g., her, his, an, the) and specific model or part numbers. If you try reading a model number to Siri on your iPhone 4S, you’ll see that Siri has difficulty understanding a string such as NG1TZ1. Yet the big words, such as airplane or toaster, are easy to identify, and those are the words that will be used in searching and retrieving the video clips.

Conversely, a transcript can have detail, but you should make sure it’s accurate. For video sites such as YouTube, if you have a transcript, turn on the captions feature after uploading the transcript. According to YouTube, this can help your discoverability as it will provide more data points to index your video.

2. Find a solution that synchronizes a word or phrase with the appropriate spot in a video. It’s great to have keywords or metatags that describe an entire video clip, but if that clip is an hour long, most users will be very frustrated if they have to view the entire clip to find that one word or phrase. One of the best ways to handle this is through closed-captioned information, which is already embedded into many pieces of content; just make sure your metadata extraction system keeps the synchronization intact.
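As a rough illustration of keeping that synchronization intact, the sketch below builds a keyword index from caption text in the common SubRip (SRT) layout, mapping each longer word to the start time of every cue in which it appears. The function name `build_keyword_index`, the `min_length` cutoff, and the sample captions are assumptions made for this example, not part of any particular extraction product.

```python
import re
from collections import defaultdict

# Matches the start of an SRT timing line such as "00:01:15,500 --> 00:01:19,000".
TIMING = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3})\s*-->")


def build_keyword_index(srt_text, min_length=5):
    """Map each longer caption word to the cue start times (in seconds) where it occurs."""
    index = defaultdict(list)
    start = None
    for line in srt_text.splitlines():
        line = line.strip()
        match = TIMING.match(line)
        if match:
            hours, minutes, seconds, millis = (int(g) for g in match.groups())
            start = hours * 3600 + minutes * 60 + seconds + millis / 1000
        elif line and not line.isdigit() and start is not None:
            # Caption text: keep only the "big words" and remember where they were spoken.
            for word in re.findall(r"[a-z']+", line.lower()):
                if len(word) >= min_length and start not in index[word]:
                    index[word].append(start)
    return dict(index)


# Hypothetical captions, used only to exercise the function.
SAMPLE_SRT = """1
00:00:05,000 --> 00:00:08,000
Welcome to the factory tour.

2
00:01:15,500 --> 00:01:19,000
This toaster ships with the airplane kit.
"""

index = build_keyword_index(SAMPLE_SRT)
print(index["toaster"])  # prints [75.5]
```

A player or search page could use those offsets to jump straight to the moment a word is spoken instead of making the viewer scrub through an hour-long clip.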

3. Noise matters. Isolate vocals as much as possible from background noise or from other tracks. Nothing kills the accuracy of a speech-to-text metadata extraction faster than background noise, whether it’s music, sound effects, or a badly placed microphone. From background conversations to a too-loud overhead public address system, the presence of additional voices confuses most speech-to-text systems, dropping their accuracy to less than 60%.

4. Use embedded metadata whenever possible. Telestream points out that some video formats, particularly those used for web streaming or editing, may already contain metadata such as descriptions of individual scenes. In some cases, it is possible to preserve and extract this information to facilitate searching. Yet not every piece of information will be preserved.

5. Don’t be afraid to manually enter information.

When it comes to facial recognition, a number of video-indexing tools do a decent job of capturing full-on shots (those where the subject appears on screen, alone, and facing the camera). When it comes to profile shots or group shots, far fewer systems are capable of handling multiple faces. This will change over time—we’re already seeing an uptick in still photo systems identifying multiple people in group shots—but for now, don’t be afraid to manually add metadata for key points in a video. More detail about the contents of a video often equals more value for the video itself.

6. For premium content, especially theatrical or television content, consider buying access to existing metadata. That is, when you are allowed to. In an article last year, we mentioned that granular metadata is becoming so valuable that studios will sell access to some high-level metadata content for sites such as IMDb while holding on to granular metadata content as competition-sensitive.

7. Go to the source. When it comes to online video platforms, YouTube has a different set of search requirements than iTunes, which has a different set of requirements than Vimeo, or even your platform’s application store.

To understand what metadata is needed and what pitfalls to avoid, go to the source: the blog posts or FAQs for a particular content network. As an example, YouTube has a very distinct set of search fields. YouTube is known as the second-biggest search engine after parent Google’s own, and its product managers recommend a few key points in a very informative blog post titled “Tips for Partners: Words, Words, Words!!” (http://bit.ly/vSUZI1).

One of YouTube’s main dislikes is spam. If the YouTube algorithm finds repetitive words within a video description, title, or tag list—or even across a number of videos in your channel—the search success of that particular content will decline.

“Don’t repeat words in your description or title, this will not help you,” the blog post warns. “Rather use different words and variations that users might search on to find your video.”
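One way to catch this before uploading is a simple repetition check across the title, description, and tags. The sketch below is only an illustration: YouTube does not publish how its algorithm measures repetition, so the function name `repeated_words` and the `min_length` and `max_count` thresholds are assumptions chosen for the example.

```python
import re
from collections import Counter


def repeated_words(title, description, tags, min_length=4, max_count=2):
    """Return words that appear more than max_count times across all text fields."""
    text = " ".join([title, description, " ".join(tags)]).lower()
    # Ignore very short words, which repeat naturally in any sentence.
    words = [w for w in re.findall(r"[a-z0-9']+", text) if len(w) >= min_length]
    counts = Counter(words)
    return {word: n for word, n in counts.items() if n > max_count}


# Hypothetical listing, used only to exercise the function.
flagged = repeated_words(
    title="Toaster review: best toaster 2012",
    description="We test the toaster everyone wants.",
    tags=["toaster", "kitchen", "review"],
)
print(flagged)  # prints {'toaster': 4}
```

Any word the check flags is a candidate for replacement with a variation that viewers might also search on.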

A number of video formats (containers) on the market support in-file metadata including timed-text options such as subtitles. While the words spoken in a video are prime candidates for in-file metadata, there is some content that might be better held outside the file and referenced.

8. Use your desktop video player to find out which metadata already exists within the file. While a few pieces of metadata can be gleaned from the detailed list view in Windows XP, Vista, or 7 (or “Get Info” on Mac OS X), a video player is the best way to find out what metadata is already available inside the video file (called in-file metadata).

For users of Mac OS X, for instance, QuickTime has a Movie Inspector (choose Window > Show Inspector or press Command-I) that shows a number of pieces of metadata:

- Name of file

- Album name

- Copyright (if any)

- Source (file location or URL)

- Format (codec, bitrate, number of channels, and, for audio, the sample rate in kHz)

For even more detail, a program such as Metadata Hootenanny (why? we have no idea) distributed by 3ivx Technologies Pty. Ltd. can allow additional metadata—including URLs, such as cover art or IMDb links—to be added to the file. Hootenanny can also import chapter tags and even metadata parsed by a particular language track. As the basis for the MP4 file container format, QuickTime has the ability to handle multiple synchronized audio tracks for either surround sound or multiple languages. In addition, QuickTime can handle interactive DVD-like menus natively within its file structure.

Other players, such as VLC, can handle additional metadata, including timed-text options such as subtitles, which can be toggled on and off. Other open source options include EasyTAG, which was originally designed for adding ID3 tags to MP3 files but also works for MP4 files, and the stalwart FFmpeg, on which many open source transcoding applications are based.

One word of caution about open source metadata tools, especially builds of popular programs such as FFmpeg: these are forked or split among developers who work on various pieces, so your mileage may vary with a particular build of FFmpeg.

In fact, a search of recent open source metadata tools reveals that “FFmpeg’s metadata handling is in flux,” according to a recent blog post on Arch Linux. “If one builds an unpatched version from subversion right now, the metadata doesn’t line up properly in some cases.”
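For readers who want to script the inspection rather than open each file in a player, the sketch below asks `ffprobe` (the inspection tool that ships with FFmpeg) for a JSON report and pulls out the container-level and per-stream tags. It assumes `ffprobe` is installed and on the path. The helper names and the sample report are made up for illustration, and, in keeping with the caution above, the tags a given build reports may differ.

```python
import json
import subprocess


def ffprobe_command(path):
    """Build an ffprobe call that reports container and stream details as JSON."""
    return ["ffprobe", "-v", "quiet", "-print_format", "json",
            "-show_format", "-show_streams", path]


def extract_tags(ffprobe_json):
    """Return (container_tags, list_of_stream_tags) from ffprobe's JSON report."""
    data = json.loads(ffprobe_json)
    container_tags = data.get("format", {}).get("tags", {})
    stream_tags = [stream.get("tags", {}) for stream in data.get("streams", [])]
    return container_tags, stream_tags


def read_in_file_metadata(path):
    """Run ffprobe on a real file; requires ffprobe to be installed."""
    result = subprocess.run(ffprobe_command(path),
                            capture_output=True, text=True, check=True)
    return extract_tags(result.stdout)


# Illustrative report only, not output captured from a real file.
SAMPLE_REPORT = """{
  "streams": [
    {"codec_type": "video", "tags": {"language": "eng"}},
    {"codec_type": "audio"}
  ],
  "format": {"tags": {"title": "Factory Tour", "copyright": "2012 Example Co."}}
}"""

container, streams = extract_tags(SAMPLE_REPORT)
print(container["title"])  # prints Factory Tour
```

Separating the parsing from the `ffprobe` call makes it easy to compare reports produced by different builds of the tool.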

9. DASH to the rescue? The buzz around MPEG DASH (MPEG’s Dynamic Adaptive Streaming over HTTP) has hit peak level now that the initial standard has been ratified. The hard part is yet to come, however, as the adoption of a common file format and common encryption schemes will quickly be followed by a number of DASH-compliant players. At least that’s the hope, since guaranteeing interoperability between potentially DASH-compliant players may be the biggest challenge of all. Expect to see DASH players from QUALCOMM, Inc., Ericsson, and even Microsoft and Adobe.

Once encoded content fragments play back consistently on every DASH player, the next step is to make sure that metadata lines up among the players. It would seem easy, almost inherent to the MPEG DASH specification, since DASH is nothing more than a consistent XML-based way to reference the various synchronized MP4 fragments. Yet the challenge will remain at least for several months after the first DASH-compliant players hit the market. This is one of the key testing areas that Transitions, Inc., a consulting firm I co-founded to provide interoperability testing, will focus on during the first half of 2012.
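To make the “consistent XML-based way to reference fragments” idea concrete, here is a minimal sketch that reads a hand-written MPD and lists the representations a player could choose among. The sample manifest is a simplified assumption written for this example: a real MPD also carries segment addressing and timing details. The function name `list_representations` is likewise hypothetical.

```python
import xml.etree.ElementTree as ET

# XML namespace used by MPEG DASH media presentation descriptions.
NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Simplified, hand-written manifest for illustration only.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static" mediaPresentationDuration="PT60S">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="low" bandwidth="500000" width="640" height="360"/>
      <Representation id="high" bandwidth="2000000" width="1280" height="720"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_text):
    """Return the id, MIME type, and bandwidth of each Representation in the MPD."""
    root = ET.fromstring(mpd_text)
    representations = []
    for adaptation in root.findall("mpd:Period/mpd:AdaptationSet", NS):
        for rep in adaptation.findall("mpd:Representation", NS):
            representations.append({
                "id": rep.get("id"),
                "mime_type": adaptation.get("mimeType"),
                "bandwidth": int(rep.get("bandwidth")),
            })
    return representations


for rep in list_representations(SAMPLE_MPD):
    print(rep["id"], rep["bandwidth"])
```

Running the same listing against manifests from different encoders is one simple way to spot where their metadata fails to line up.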

10. Keep it fresh. Streaming Media contributing editor Jose Castillo uses the sig line “keep it spicy” in many of his emails. For video metadata, the corollary is to keep it fresh.

In other words, don’t just tag a video, upload it, and forget about it.

“If you have a popular video that continues to get views over time, update your tags regularly to take advantage of new searches,” the “Tips for Partners” YouTube blog post says, noting that “Online search behavior is always changing, so your tags should change along with it.”

Think about your video metadata for online video platforms in much the same way you would a blog post: Particular labels or tags are attractive when a blog post is newly minted, especially when the post is timely to current events. Yet the tags that have legs for referential or how-to, text-based content may differ a month or two after the initial blog post.

The same is true for long tail video content, with the added emphasis that tags and labels become even more important for videos without transcripts or captions, since the tags, title, and description are the only current ways to differentiate a popular video from a less popular one.

Finally, it’s worth noting a few metadata players in the marketplace. Companies including Anyclip, Digitalsmiths Corp., Thought Equity Motion, and Pictron, Inc. offer a variety of metadata extraction suites. Some are offered as products, while others are offered as video discovery services. In addition, basic metadata extraction is available within Adobe nonlinear editing software and Apple’s iMovie and Final Cut Pro X. A number of additional products, under the heading of “computer vision systems,” will arrive on the market in 2012.
