Vindral: Reliable & Scalable Ultra Low Latency Video Playback

At a presentation I gave at Streaming Media East this past May, I reviewed the low latency options currently available on the market for live streaming, mostly from the perspective of the technology stacks available to content providers and the vendors that provide those stacks. From lowest latency to highest, those options include WebRTC, WebSockets, variations of CMAF, HLS (Apple’s HTTP Live Streaming approach), and DASH.

For any stakeholder with live streaming business requirements, the myriad of options can be overwhelming and easily misunderstood. Picking the wrong solution or approach would likely lead to inconsistent results for the customer, as well as expensive reworking of the tech stacks down the road. Moreover, many of these tech stacks undergo massive changes from one release to the next, with mixed implementations from browser vendors.

Enter RealSprint’s newest low latency streaming CDN solution: Vindral. Vindral uses WebSockets technology as the foundation for its sub-one-second delivery of live content. In this article, I explain why WebSockets technology is likely the best choice for your live streaming and why Vindral is well positioned as a premier CDN in the low latency market.

Note: For purposes of this discussion, I will be focusing on one-to-many business requirements, where an origin stream needs to be shared with many subscribers in real-time, regionally or globally.

A Primer for Streaming Technologies

Let’s compare the current low-latency stacks to get a better sense of the technical requirements involved with each stack. Understanding the differences in deployment will help you determine which streaming transports should be used to provide a premium viewing experience for your customers.

HTTP Streaming: CMAF, HLS and DASH Variations

One of the holy grails of streaming delivery is to be able to use existing HTTP scaling architecture to deliver high volume and reliable service. In short, if you can scale general web content with CDNs for HTTP traffic, you should be able to use the same scaling mechanisms for streaming video.

For latency in streaming playback, several factors can come into play. Round trip time (RTT) for HTTP packets over TCP is going to add some latency to the stream. HTTP caching is also a primary component of CDN architectures, and caching by definition requires content to be written and stored, which also adds to latency. More importantly, CMAF, HLS, and DASH are all manifest-driven formats whose performance is largely dictated by the video player code running in a web application or device app. The video player has to constantly fetch the “table of contents” of the stream (which is typically more than one level deep) before it can start loading and playing segments of the referenced stream. Segment duration is also a key factor in determining latency with these formats.

As streams in this category are served over HTTP or HTTPS protocols on standard TCP ports, network firewalls don’t usually reject this traffic. Therefore, you have some reassurance that firewall-related playback failures will be of little concern.
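To make the effect of segment duration concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a player that waits for a fixed number of whole segments before starting playback; the function name, parameter names, and sample values are illustrative assumptions, not figures from any particular player or CDN.

```python
def estimate_segmented_latency(
    segment_seconds: float,
    buffered_segments: int,
    encode_seconds: float = 0.0,
    delivery_seconds: float = 0.0,
) -> float:
    """Rough glass-to-glass latency estimate for manifest-driven HTTP streaming.

    Simplifying assumption: the player waits for `buffered_segments` complete
    segments before it starts, so the buffer alone contributes
    segment_seconds * buffered_segments of delay.
    """
    if segment_seconds <= 0 or buffered_segments < 1:
        raise ValueError("segment_seconds must be > 0 and buffered_segments >= 1")
    return encode_seconds + delivery_seconds + segment_seconds * buffered_segments


# Hypothetical values: 6-second segments versus 2-second segments,
# both with a 3-segment buffer, 1 s of encoding, and 0.5 s of delivery.
long_segments = estimate_segmented_latency(6.0, 3, encode_seconds=1.0, delivery_seconds=0.5)
short_segments = estimate_segmented_latency(2.0, 3, encode_seconds=1.0, delivery_seconds=0.5)
print(long_segments, short_segments)  # 19.5 7.5
```

Under these assumptions, the buffered segments dominate the total, which is why shortening segments (or splitting them into parts) is the main lever these formats have for reducing latency.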

Within the last few years, Low Latency HLS, or LL-HLS, has been in development by Apple and other major vendors including Vindral. The core improvement of LL-HLS over typical HTTP streaming formats is a further reduction in chunk or segment sizes: a video player can more predictably fetch the most current parts of a larger chunk or segment without waiting for other parts to preload. While the specification is an improvement over traditional HLS deployment, it’s not as easy to skip segments or frames to maintain synchronization with a real-time stream. In early testing I did with LL-HLS, live streams would often drift out of sync from real-time as playback time increased. Most off-the-shelf web video players need a specific LL-HLS implementation in order to work. Vindral is actively working on its own LL-HLS deployment to augment its existing WebSockets delivery system. Having multiple transport technologies available helps ensure successful stream playback across a wide range of network environments and operating systems used by target audiences.

WebRTC

As Adobe’s Flash technology and its RTMP streaming playback disappeared from the web and native app realms, a new real-time protocol came about: WebRTC (Web Real-Time Communication). This new protocol promised to add real-time audio and video streaming to web apps using HTML5 standards. WebRTC could work in a variety of delivery scenarios, including P2P (Peer to Peer) where clients send packets directly to each other, SFU (Selective Forwarding Unit) where servers broker packets between clients, and MCU (Multipoint Control Unit) where servers merge multiple audio and/or video upstream packets from clients to create a single downstream for each connected client. After a WebRTC client connects to a server and negotiates its path to content, the audio and video are delivered over TCP and/or UDP ports, ideally the latter, particularly when it comes to sub-one-second latency. WebRTC packets received by the client have minimal buffering, and with UDP delivery, packets can be dropped much more easily to keep up with real-time playback.

As one of WebRTC’s original intentions was to enable real-time audio, video, and data between multiple clients sharing data with each other (e.g. audio/video conferencing), the emphasis was on keeping interactions as real-time as possible, with negligible latency. As a result, the quality of the video stream may fluctuate to maintain real-time playback, and control of playback quality may rest not with the player code but with the underlying native browser APIs. Video playback might not be able to maintain high bitrate, high quality, and high frame rate over WebRTC and achieve ultra low latency at the same time.

WebSockets

How do WebSockets fit into a streaming solution? In short, WebSockets deliver nearly any type of binary “data”, like audio or video packets, over a real-time bi-directional socket between a client (such as a web app running in a browser) and a server. The client can receive data as well as send information back to the server. Many types of applications can be built on top of WebSockets technology, from real-time chats to low-latency streaming video and audio.
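As a minimal sketch of what “binary data over a socket” can look like, the Python below packs and unpacks a media message of the kind a server might send as a single binary WebSocket message. The 13-byte header layout, the type constants, and the function names are all hypothetical and invented for illustration; they do not describe Vindral’s or any other vendor’s wire format.

```python
import struct
from typing import Tuple

# Hypothetical 13-byte header: stream type (1 byte), presentation
# timestamp in milliseconds (8 bytes), payload length (4 bytes).
# "!" selects network (big-endian) byte order with no padding.
HEADER = struct.Struct("!BQI")

TYPE_VIDEO = 1
TYPE_AUDIO = 2


def pack_media_message(stream_type: int, pts_ms: int, payload: bytes) -> bytes:
    """Prefix an encoded audio or video payload with the illustrative header."""
    return HEADER.pack(stream_type, pts_ms, len(payload)) + payload


def unpack_media_message(message: bytes) -> Tuple[int, int, bytes]:
    """Split a received binary message back into (type, timestamp, payload)."""
    if len(message) < HEADER.size:
        raise ValueError("message shorter than header")
    stream_type, pts_ms, length = HEADER.unpack_from(message)
    payload = message[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return stream_type, pts_ms, payload


message = pack_media_message(TYPE_VIDEO, 1500, b"\x00\x01\x02")
print(unpack_media_message(message))
```

Because the socket is bi-directional, the same kind of framing could carry player feedback (for example, buffer levels) back to the server.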

More importantly, WebSockets have been around for a long time! The specification was first introduced in 2008, and by 2011, every major browser vendor supported both insecure WebSocket (ws://) and secure WebSocket (wss://) protocols. The latter protocol uses standard TLS encryption just like HTTPS connections do in the web browser. A vendor building services on top of secure WebSocket connections can have confidence in a proven, reliable technology backed by years of browser implementation. The same can’t be said of other technologies used to stream low-latency content.

Playback and multi-bitrate control of audio and video content over WebSockets is not provided by native media APIs in the web browser. Most WebSockets video playback is specific to a customized player codebase that relies on HTML5 Media Source Extensions (MSE). Every WebSocket streaming vendor has a proprietary system for delivering content.

The Business Case for WebSocket Technology

For low-latency streaming that isn’t based on HTTP or HTTPS like HLS or DASH, the two biggest contenders are WebRTC and WebSockets. As discussed in the previous section, WebRTC and WebSockets establish bi-directional communication between the client and server, enabling much more finesse and control over sent and received data, such as dropped frames, round trip time (RTT), and much more.

So how do you choose between WebRTC and WebSockets? Clearly, they are the two lowest latency options, but which is “better”? While each use case and business requirement can be unique, here are five reasons for choosing a WebSockets streaming solution over a WebRTC one:

- Scalability: Typically, WebSockets server technology is easier to scale across the cloud. Enabling more WebSocket edges to WebSocket origins is much less complex from a networking and cross-regional perspective than WebRTC is.

- Firewalls: WebSockets use the same ports as other web traffic: usually TCP port 80 for insecure traffic, and TCP port 443 for secure traffic. Most firewalls allowing web traffic will also allow WebSocket traffic, making it much easier to get your content into increasingly locked-down networks. WebRTC, on the other hand, can have difficulty getting across the Internet and into a LAN environment, particularly if the traffic is traversing mobile carrier networks with symmetric NAT (Network Address Translation). WebRTC needs to use yet another layer of transport, TURN (Traversal Using Relays around NAT), to do exactly that: get around the problem of NAT environments.

- Cost: Services using WebSockets will typically incur lower cloud costs to run and maintain than competing real-time technologies such as WebRTC. This lower cost usually translates into lower prices from WebSocket streaming vendors compared to WebRTC vendors.

- Codec Consistency: WebSockets can use specific video and audio codecs that are consistent between client applications running in a web browser, unlike WebRTC, which may fall back on specific codecs favored by the browser vendor.

- Developer Learning Curve: SDKs for one-to-many WebSocket services are much easier to implement and control, as there are fewer variables to consider than with WebRTC. This ease of use means faster development time, faster testing, and faster deployment.
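The firewall point in the list above comes down to which port a connection uses. As a small illustration, this Python sketch resolves the TCP port for a WebSocket URL, falling back to the standard defaults when none is given. The function name and the example URLs are assumptions made for this example.

```python
from urllib.parse import urlsplit

# Default ports for the two WebSocket URL schemes.
DEFAULT_PORTS = {"ws": 80, "wss": 443}


def websocket_port(url: str) -> int:
    """Return the TCP port a WebSocket URL will connect to."""
    parts = urlsplit(url)
    if parts.scheme not in DEFAULT_PORTS:
        raise ValueError(f"not a WebSocket URL: {url!r}")
    # An explicit port in the URL overrides the scheme default.
    return parts.port if parts.port is not None else DEFAULT_PORTS[parts.scheme]


print(websocket_port("wss://stream.example.com/live"))  # 443
```

A stream served from a `wss://` URL without an explicit port therefore shares port 443 with ordinary HTTPS traffic.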

Vindral’s core streaming solution is based on WebSockets technology, with forthcoming support for LL-HLS. In the next section you’ll learn why Vindral’s implementation is appealing for one-to-many live streaming deployments.

Why is Vindral Special?

Once you are convinced that WebSockets technology is more likely to satisfy your business requirements for an ultra low latency solution, your next step is to determine your path to its implementation. The classic “build or buy” dilemma applies to ultra low latency streaming just as it does to the rest of the product and software development process.

It’s beyond the scope of this article to fully detail the components of a low latency WebSockets streaming cloud architecture and solution, but at minimum you’d need to develop the following:

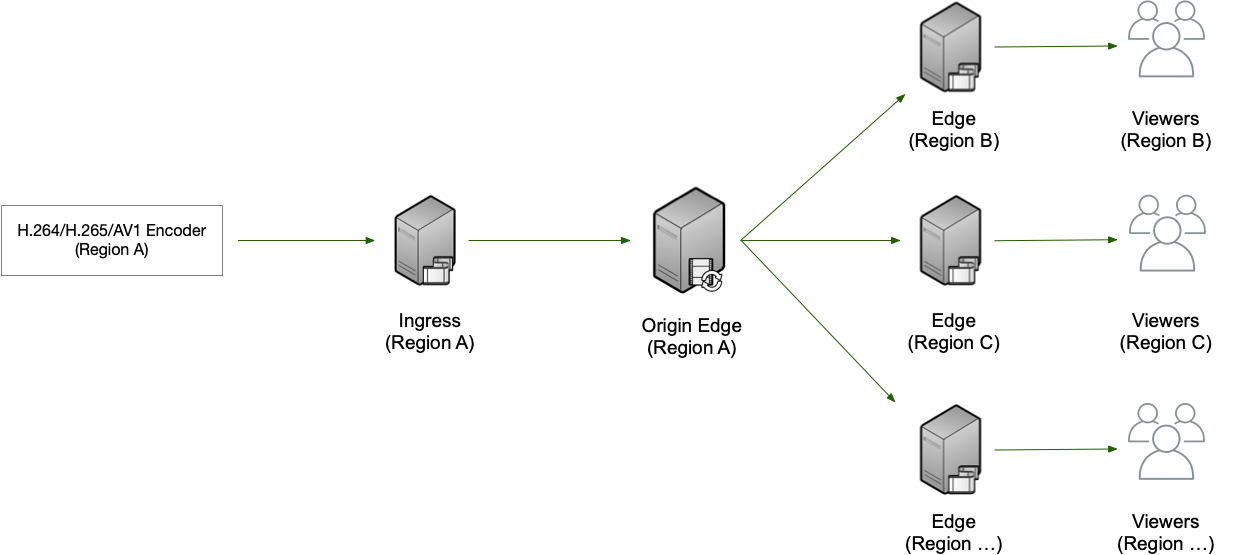

- Ingest architecture: How are you going to ingest from your publishing points such as H.264 streaming appliances or applications? In which regions will your ingests need to exist?

- Edge-origin architecture: How are you going to scale in real time the necessary edge servers from a given origin source?

- Video playback: What metrics will you use to determine frame drops, video quality switching, and synchronization of playback across viewers? How will those metrics be implemented in a web application or native application? Are your software developers savvy with media APIs and event handling? How will you manage audio and video buffering?

- Codec specifications: Which codecs will you support for ingest and playback? How will properties of selected codecs be controlled to provide a superior viewing experience?

- Transcoding implementation: Most streaming solutions need to consider adaptive bitrate playback in order for content to play smoothly and uninterrupted across a variety of network conditions. How will transcoding resources be created and managed? Do you build your own custom encoder solution or rely on another service?
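To give a sense of the player-side logic that the video playback and transcoding questions above imply, here is a minimal adaptive bitrate selection sketch in Python. The rendition ladder, the 0.8 safety factor, and all names are hypothetical choices for illustration; a production player would also weigh buffer health, dropped frames, and switch frequency.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


def pick_rendition(
    ladder: List[Rendition], throughput_kbps: float, safety: float = 0.8
) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a share of throughput.

    Only `safety` * measured throughput is budgeted for video, leaving headroom
    for fluctuation. If nothing fits, the lowest rendition is returned so that
    playback can continue.
    """
    if not ladder:
        raise ValueError("ladder must contain at least one rendition")
    budget = throughput_kbps * safety
    ordered = sorted(ladder, key=lambda r: r.bitrate_kbps)
    best = ordered[0]
    for rendition in ordered:
        if rendition.bitrate_kbps <= budget:
            best = rendition
    return best


# Hypothetical ladder for illustration only.
LADDER = [
    Rendition("1080p", 8000),
    Rendition("720p", 4000),
    Rendition("480p", 1500),
    Rendition("360p", 800),
]

print(pick_rendition(LADDER, throughput_kbps=6000).name)  # 720p
```

With 6,000 kbps of measured throughput, the budget is 4,800 kbps, so the 720p rendition is the highest one that fits.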

These items are just the starting point for considering your own custom built streaming solution. When you reach the conclusion that seeking an all-in-one streaming CDN based on WebSockets tech is better than the alternative of building your own, you’re now comparing vendors offering an ultra low latency solution.

Figure 1: Ingress/egress for live streaming cloud architecture

In my own independent testing of Vindral against competing vendors, I found Vindral to demonstrate superior performance with playback resiliency and playback responsiveness.

Playback Resiliency

The first “wow” factor I experienced with Vindral was how well it performed under low or starved bandwidth conditions. Using FFmpeg as an RTMP encoder generating a 1080p 30fps 8Mbps stream into Vindral and other competitors, I found that the player logic with Vindral’s WebSocket platform consistently provided a smoother playback experience with connection speeds lower than the source bitrate. Using NetBalancer as a bandwidth throttling tool to simulate 4G and 5G connection conditions, Vindral playback and performance were consistent across devices.
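For readers who want to set up a comparable source, the Python sketch below assembles an FFmpeg command that generates a synthetic 1080p, 30 fps, 8 Mbps H.264 test stream and pushes it over RTMP. This is not the exact command used in the testing described above: the encoder settings beyond resolution, frame rate, and bitrate are assumptions, and the ingest URL is a placeholder to replace with the one your vendor provides.

```python
import shlex
from typing import List


def build_ffmpeg_test_stream(ingest_url: str) -> List[str]:
    """Build FFmpeg arguments for a synthetic 1080p30 8 Mbps RTMP test stream."""
    return [
        "ffmpeg",
        # Synthetic video and audio sources, each read in real time (-re).
        "-re", "-f", "lavfi", "-i", "testsrc2=size=1920x1080:rate=30",
        "-re", "-f", "lavfi", "-i", "sine=frequency=440:sample_rate=48000",
        # H.264 video at a capped 8 Mbps (assumed preset and tuning).
        "-c:v", "libx264", "-preset", "veryfast", "-tune", "zerolatency",
        "-b:v", "8M", "-maxrate", "8M", "-bufsize", "16M",
        "-g", "60",  # one keyframe every 2 seconds at 30 fps
        "-pix_fmt", "yuv420p",
        # AAC audio.
        "-c:a", "aac", "-b:a", "128k",
        # RTMP carries FLV-muxed streams.
        "-f", "flv", ingest_url,
    ]


# Placeholder URL; substitute your own ingest endpoint and stream key.
args = build_ffmpeg_test_stream("rtmp://ingest.example.com/live/STREAM_KEY")
print(shlex.join(args))
```

The printed command can be pasted into a terminal, or the argument list can be passed to `subprocess.run(args, check=True)` on a machine with FFmpeg installed.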

While I only tested 1080p playback, higher resolutions like 4K or 8K should always be tested and proven before committing to specific deployments, as these resolutions will likely fit into your long term roadmap. On top of already supporting 4K and HDR playout, at this year’s IBC showcase, Vindral’s team demonstrated a tech preview of 8K streams working just as exceptionally as other content.

With constricted network conditions such as those that might exist over a VPN or privacy guard network software, Vindral again performed consistently across devices and browsers. Some WebRTC implementations that were tested could not establish a connection or play back the test stream, likely due to the lack of a TURN fallback that would work under stricter network rules.

Playback Responsiveness

Vindral’s embedded player also performed well in other performance categories, including:

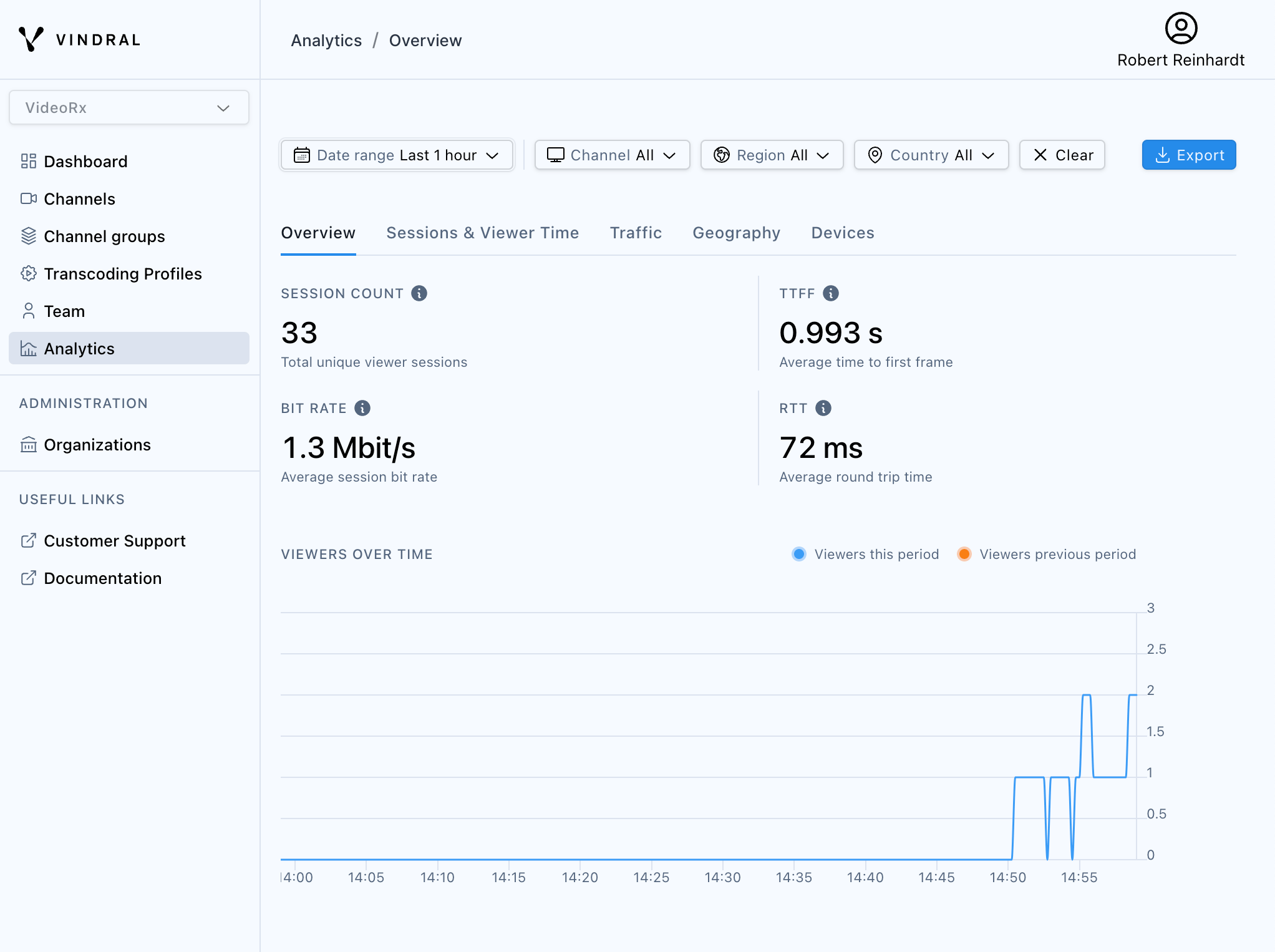

- Time to first frame: Player initialization and start times were exceptionally fast, usually between 300 and 600 milliseconds, and as low as 200 ms. Customers can view this statistic among other information in the Analytics section of the Vindral portal (see Figure 2).

- Buffer and sync control: Minimum and maximum buffer times for playback could be adjusted easily with variables passed to the embedded player, and buffer times of 1 second played smoothly at full frame rate and at or below 100 ms of variation between test devices.

- Adaptive bitrates: Vindral’s ingest service transcodes the source stream into renditions specified in the Transcoding Profiles section of your Vindral portal account. Manual switches during playback were seamless, and simulated bandwidth throttling didn’t interrupt playback during quality switches.

Figure 2: Analytics Overview of Vindral session
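One common way a player can honor minimum and maximum buffer settings like those described above is to nudge its playback rate. The Python sketch below shows that idea under simple assumptions; it is not Vindral’s player logic, and the function name, parameters, and 5% adjustment are hypothetical.

```python
def playback_rate(
    buffer_seconds: float,
    min_buffer: float,
    max_buffer: float,
    max_adjust: float = 0.05,
) -> float:
    """Return a playback-rate multiplier that steers the buffer into range.

    Above max_buffer the viewer is falling behind live, so play slightly
    faster. Below min_buffer the buffer is running dry, so play slightly
    slower to let it refill. Otherwise play at normal speed.
    """
    if not 0 <= min_buffer <= max_buffer:
        raise ValueError("require 0 <= min_buffer <= max_buffer")
    if buffer_seconds > max_buffer:
        return 1.0 + max_adjust
    if buffer_seconds < min_buffer:
        return 1.0 - max_adjust
    return 1.0


# Hypothetical target window of 0.5 to 1.0 seconds of buffered media.
for level in (0.2, 0.8, 1.6):
    print(level, playback_rate(level, min_buffer=0.5, max_buffer=1.0))
```

Small rate changes of this kind are usually hard for viewers to notice, which is why they are a popular way to keep multiple devices close to the same point in a live stream.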

Cost

As part of my review of Vindral, I presented past client engagements and deployment strategies to the Vindral team. More often than not, Vindral’s cost for the same target audiences and stream qualities was 50% less than current costs from other vendors. Vindral also offers a flexible business model and custom pricing specific to your deployment needs. If you’re using next gen codecs such as AV1, you’ll benefit from lower traffic volume as well.

Next Steps

So what’s your next move? When you’re ready to switch from your current streaming deployment to Vindral, what do you need to consider? I advise any of my clients to evaluate a new technology or service as objectively as possible:

- Implement a rapid Proof of Concept (PoC): Build a test harness to compare player implementations for your streaming content, so that you can compare real-time sync to the source.

- Determine your maximum source stream count: How many incoming sessions do you need to support independently and concurrently? Identify which geographic regions will be needed to support your source streams. Vendor costs can vary greatly with ingest fees.

- Monitor performance: Establish procedures to measure the metrics that matter to you for the viewing experience, and track metrics as needed.
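For the proof of concept and monitoring steps above, a test harness ultimately needs to reduce many latency readings to a few comparable numbers. The Python sketch below summarizes a set of measurements; it assumes you already collect glass-to-glass latency samples in milliseconds (for example, by comparing a clock burned into the source with the time shown in the player). The sample values are made up for illustration and are not test results.

```python
import math
import statistics
from typing import Dict, Sequence


def summarize_latency(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize latency samples as median, 95th percentile, max, and spread."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: the smallest sample with at least 95% of
    # all samples at or below it.
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
        "max_ms": ordered[-1],
        "spread_ms": ordered[-1] - ordered[0],
    }


# Made-up readings from a hypothetical player under test.
summary = summarize_latency([420, 450, 480, 510, 900])
print(summary)
```

Running the same summary for each player or vendor in the harness gives you like-for-like numbers to track over time, and the spread is a quick indicator of how well viewers stay in sync with each other.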

In summary, the current ecosystem for low latency live streaming involves several different technologies to accomplish the same result: consistency in playback across a wide range of devices and resiliency across adverse network conditions. Make sure you take the time to properly evaluate your current and future business requirements before implementing a new streaming solution.

vindral.com

This article is Sponsored Content
