AI and Streaming Media

Let's face it: Until ChatGPT launched, fully formed and instantly useful, machine learning and artificial intelligence were buzzwords we almost snickered at, as if their inclusion on a spec sheet were more for marketing purposes than to designate any new or even enhanced functionality. While that was certainly true for some products and services, the seeds of truly useful streaming-related AI/ML functionality were planted in 2016 or even earlier and are now bearing spectacular fruit throughout the streaming encoding, delivery, playback, and monetization ecosystems.

This article explores the current state of AI in these ecosystems. By understanding the developments and considering key questions when evaluating AI-powered solutions, streaming professionals can make informed decisions about incorporating AI into their video processing pipelines and prepare for the future of AI-driven video technologies.

As a caveat, the companies and products mentioned here are those that came to my attention during my research; the list is by no means exhaustive. AI in our ecosystem is a topic I’ll be watching, so if you have a product or service that you feel I should have discussed, contact me at janozer [at] gmail.com to initiate a conversation.

Let’s start at close to the beginning with video pre-processing.

Pre-Processing and Video Enhancement

AI-powered pre-processing tools are making significant strides in improving video quality and reducing bandwidth requirements. Two notable companies in this space are Digital Harmonic and VisualOn.

Digital Harmonic’s Keyframe (see Figure 1) is a pre-processor that offers both quality improvement and bandwidth reduction. The product existed before AI integration, but AI has enhanced its performance in both of these modes. Keyframe claims to achieve up to an 80% bitrate reduction with no loss in quality, measured by both peak signal-to-noise ratio and mean opinion score (MOS). It can also improve video quality beyond the original source, although this raises questions about preserving creative intent.
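For readers who want to check claims like these on their own content, PSNR is simple to compute. The sketch below is a minimal pure-Python version that works on flat lists of 8-bit sample values. The function name and the sample data are my own illustrative assumptions, and a production workflow would more likely rely on FFmpeg or a dedicated measurement tool.

```python
import math

def psnr(reference, distorted, max_value=255):
    """Return PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error between the source and the processed samples
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)

# Toy example: four 8-bit luma samples (made-up values)
source = [52, 55, 61, 59]
encoded = [54, 55, 60, 57]
print(round(psnr(source, encoded), 2))
```

PSNR is only a rough proxy for what viewers see, which is why a vendor reporting it alongside MOS is more persuasive than one reporting PSNR alone.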

Figure 1. Digital Harmonic’s Keyframe pre-processor makes some bold claims about bitrate savings

Keyframe operates as a “bump in the wire” GPU-based system, sitting in the video chain before the encoder. It is codec-agnostic, making it versatile for various encoding setups. However, its throughput capabilities need to be considered when pairing with high-performance encoding systems.

VisualOn’s Optimizer takes a different approach, integrating directly with the encoder via an API. It adjusts bitrate and other parameters on a frame-by-frame basis, adapting to content complexity. VisualOn claims up to 70% bitrate reduction without quality loss, measured by VMAF (Video Multimethod Assessment Fusion). Interestingly, despite reducing the bitrate, VisualOn argues that its solution can increase scalability and throughput by reducing the encoder’s workload.

Both solutions demonstrate the potential of using AI in pre-processing to significantly improve compression efficiency and video quality. However, users should carefully consider the implications for creative intent and conduct thorough testing to validate claims in their specific use cases.

AI in Video Encoding

Several companies are using AI to improve the compression efficiency of existing codecs, which can be instantly deployed on existing players. Another class of products, discussed next, are using AI to create new codecs that will need their own set of compatible devices for playback.

Harmonic’s EyeQ is a prime example of the first use case. EyeQ, which existed before AI implementation, now boasts improved compression efficiency thanks to its AI component. Harmonic claims a 50% greater efficiency than open source alternatives, although the company doesn’t specify which codecs or metrics it’s comparing against. The most compelling evidence of EyeQ’s effectiveness is its adoption by more than 100 customers. EyeQ is available as both an appliance and a cloud service.

Visionular, another codec company, offers AI-enhanced implementations of H.264, HEVC, and AV1 codecs (see Figure 2). Its AI integration aims to improve compression efficiency, claiming up to a 50% bitrate reduction compared to open source implementations of the same codecs. Visionular’s president, Zoe Liu, has been studying AI applications in video compression since at least 2021, highlighting the company’s long-term commitment to this technology.

Figure 2. Visionular improves H.264, HEVC, and AV1 with AI.

Media Excel’s DIVA (Dynamic Intelligent Video Adaptive) technology uses AI to analyze and optimize encoding settings in real time, achieving superior video quality and compression efficiency across various codecs. By training on tens of thousands of hours of HEVC content, DIVA has demonstrated at least 20% efficiency improvements, with ongoing efforts to achieve similar gains for H.264 and VVC.

Codec Market takes a different approach, offering an integrated cloud platform that includes an encoder, player, content management system, and CDN. Its AI implementation uses an advanced open source version of VMAF during the encoding process. This allows for real-time content-adaptive encoding, targeting consistent quality levels around a user-selectable VMAF score. Codec Market claims to be 30% more efficient than open source alternatives.
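To make the idea of targeting a quality score concrete, here is a minimal sketch of one generic way to do it: a binary search for the highest CRF value (and so the lowest bitrate) that still meets a target score. This is not Codec Market's method, which the company hasn't detailed here. The `measure_quality` callback is a hypothetical stand-in for "encode at this CRF, then measure VMAF," and the linear `fake_vmaf` model exists only so the example runs.

```python
from typing import Callable

def find_crf_for_target(
    measure_quality: Callable[[int], float],
    target_score: float,
    crf_min: int = 18,
    crf_max: int = 40,
) -> int:
    """Return the highest CRF (lowest bitrate) whose score still meets the target.

    Assumes quality falls monotonically as CRF rises, which is usually
    but not always true for real encoders.
    """
    best = crf_min  # fall back to the highest-quality setting
    lo, hi = crf_min, crf_max
    while lo <= hi:
        mid = (lo + hi) // 2
        if measure_quality(mid) >= target_score:
            best = mid      # target met; try a higher CRF
            lo = mid + 1
        else:
            hi = mid - 1    # quality too low; back off
    return best

# Hypothetical quality model standing in for a real encode-and-measure step
def fake_vmaf(crf: int) -> float:
    return 100.0 - 1.5 * (crf - 18)

chosen_crf = find_crf_for_target(fake_vmaf, target_score=93.0)
print(chosen_crf)
```

A real-time system can't afford multiple trial encodes per segment, so production implementations typically predict the score from content features rather than search for it; the search simply shows what "targeting a VMAF score" means.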

These companies are applying AI to improve the compression efficiency of their encoding. In contrast, Facebook is using AI to determine how to prioritize encoding quality for any particular uploaded file. Specifically, Facebook employs machine learning models to predict watch time and optimize encoding strategies. These models then prioritize videos based on expected watch time, selecting the best encoding settings to maximize efficiency and quality. For example, Facebook uses metrics like MVHQ (minutes of video at high quality per GB) to compare compression efficiency across different encoding families (H.264, VP9, etc.).
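As a rough illustration of both ideas, the sketch below computes MVHQ for two encoding families and orders an upload queue by predicted watch time. All class names, field names, and numbers are hypothetical, and Facebook's production definitions and models are certainly more involved than this.

```python
from dataclasses import dataclass

@dataclass
class Encode:
    family: str                  # e.g. "H.264" or "VP9"
    minutes_high_quality: float  # minutes that met the quality bar
    size_gb: float               # data delivered, in GB

def mvhq(encode: Encode) -> float:
    """Minutes of video at high quality per GB."""
    return encode.minutes_high_quality / encode.size_gb

# Made-up numbers for illustration only
encodes = [
    Encode("H.264", minutes_high_quality=10.0, size_gb=0.50),
    Encode("VP9", minutes_high_quality=10.0, size_gb=0.32),
]
best = max(encodes, key=mvhq)
print(best.family, mvhq(best))

# Predicted watch time in minutes per upload (hypothetical model output)
uploads = {"clip_a": 1200.0, "clip_b": 45.0, "clip_c": 300.0}

# Spend expensive encodes first on the videos expected to be watched most
queue = sorted(uploads, key=uploads.get, reverse=True)
print(queue)
```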

Beyond these advancements, we’re also seeing generative AI work its way into encoder operation. For instance, Telestream’s Vantage Workflow Designer allows users to create encoding workflows using plain-English commands. While still in its early stages, this technology hints at a future in which creating transcoding workflows may not require deep compression expertise, just a cogent prompt that details the encoding source and delivery targets.

I know that Brightcove and many other companies have made substantial strides with AI/ML in encoding as well, and I look forward to experimenting with their technologies down the line.

AI-Based Codecs

The previous class of AI implementations sought to enhance existing codecs that were compatible with current players. In contrast, AI-based codecs leverage artificial intelligence to create completely new codecs that necessitate specialized players.

One business at the forefront of this technology is Deep Render, which claims to be the world’s only company focused solely on AI-based codecs (see Figure 3). It is developing an AI-based codec from scratch, boldly claiming it will achieve 45% greater efficiency than VVC, with a release scheduled for 2025. For playback, Deep Render will leverage the fast-growing installed base of neural processing units, or NPUs. These are general-purpose ML-based processing devices that started appearing on Apple iPhones in 2017 and are included in all subsequent products with newer chipsets.

Figure 3. Deep Render is developing a complete AI-based codec.

Most existing codecs require codec-specific chips or codec-specific gates in a GPU or CPU, which naturally slows technology adoption. For instance, 4 years after its finalization, VVC hardware playback is still unavailable on mobile phones and only found on a few smart TVs and OTT dongles. By leveraging general-purpose ML hardware that started shipping more than 8 years before its first codec’s release, Deep Render hopes to accelerate the adoption phase of its technology among mobile phones and other early adopters of NPU technology.

Although it is a still-image-based technology, JPEG AI uses ML to deliver superior compression efficiency and a compact format optimized for both human visualization and computer vision tasks. JPEG AI is designed to support a wide range of applications, including cloud storage and autonomous vehicles. It is not backward compatible with existing JPEG standards, although it can also leverage NPUs for accelerated playback performance.

One company helping to accelerate the design of AI-based codecs is InterDigital, with its CompressAI toolkit. CompressAI is an open source PyTorch library and evaluation platform for end-to-end compression research. It provides codec developers with tools to create entirely new AI-based codecs or add AI components to existing ones. CompressAI includes pre-trained models and allows for apples-to-apples comparisons with state-of-the-art methods, including traditional video compression standards and learned methods. Similarly, Facebook has developed NeuralCompression, an open source repository that focuses on neural network-based data compression, offering tools for both image and video compression. This project includes models for entropy coding, image warping, and rate-distortion evaluation, contributing to advancements in efficient data compression methods.

The Moving Picture, Audio and Data Coding by Artificial Intelligence (MPAI) organization is also working on AI-enhanced video coding. Its MPAI-EVC project aims to substantially enhance the performance of traditional video codecs by improving or replacing traditional tools with AI-based ones, aiming for at least a 25% boost in performance.

Since HEVC, it’s been tough to get excited over new video codecs. None of the three MPEG codecs launched in 2020 have made significant strides toward mass deployment, although LCEVC always appears to be on the verge of a major rollout. This latency relates to multiple factors, including how long it takes for hardware decoders to be deployed and a primary focus on video playback for humans.

AI-based codecs may break this logjam by using NPUs rather than dedicated silicon. In addition, as video is increasingly created for machine playback in autonomous cars, automated factories, security, traffic, and a host of other applications, AI-based codecs can be handcrafted for these use cases. Both factors may allow AI codecs to become relevant much more quickly than traditional codecs.

Super Resolution and Upscaling

Super resolution and upscaling technologies have gained traction with the advent of AI, particularly for enhancing legacy content. These technologies are valuable for media companies with extensive libraries of older movies or TV shows that need upgrading for modern 1080p or 4K displays.

One company offering this capability is Bitmovin, which has been working on AI-powered super resolution since at least 2020, combining both proprietary and open source AI implementations in its approach. A visual comparison between Bitmovin’s AI-powered super resolution and the standard bicubic scaling method in FFmpeg shows differences in sharpness and overall picture quality. This type of enhancement can potentially improve viewer experience, especially for content that wasn’t originally produced in high definition. Other companies working on AI-powered upscaling include Topaz Labs with its Video AI enhancer and NVIDIA with its DLSS (Deep Learning Super Sampling) technology.
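If you want to run this kind of comparison yourself, you first need the bicubic baseline. The sketch below builds an FFmpeg command that upscales with the bicubic scaler; the file names and the 1080p target are assumptions for illustration, and the call that would actually launch FFmpeg is left commented out.

```python
import subprocess

def bicubic_upscale_command(src, dst, width=1920, height=1080):
    """Build an FFmpeg command that upscales with the bicubic scaler."""
    return [
        "ffmpeg", "-i", src,
        "-vf", f"scale={width}:{height}:flags=bicubic",
        "-c:a", "copy",  # pass audio through untouched
        dst,
    ]

# Hypothetical file names
cmd = bicubic_upscale_command("legacy_sd.mp4", "baseline_1080p.mp4")
print(" ".join(cmd))

# To actually run it (requires FFmpeg on the PATH and a real input file):
# subprocess.run(cmd, check=True)
```

Comparing this output side by side with an AI-upscaled version of the same source is the quickest way to judge whether super resolution earns its extra processing cost on your library.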

AI for Adaptive Bitrate Streaming Optimization

Several technologies use AI to control bitrate switching during adaptive bitrate (ABR) playback to enhance the viewer’s experience. Two notable approaches are Amazon’s SODA (Smoothness Optimized Dynamic Adaptive) controller and Bitmovin’s WISH ABR. Both aim to optimize video streaming by dynamically choosing which pre-encoded video segments to download based on real-time network conditions, but they employ different methods and offer unique benefits. Most importantly, WISH is available for third-party use; so far, SODA isn’t.

As an Amazon white paper describes, SODA leverages algorithms based on smoothed online convex optimization (SOCO) to provide theoretical guarantees for improving QoE. According to the paper, SODA’s deployment in Amazon Prime Video has demonstrated significant improvements, reducing bitrate switching by up to 88.8% and increasing average stream viewing duration.

WISH aims to deliver a smoother viewing experience by optimizing the choice of video segments to download. It focuses on weighted decision making to balance video quality, buffering, and bitrate switching seamlessly.
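To show what weighted decision making can look like in practice, here is a minimal sketch of a weighted-sum rendition picker. It is not the published WISH algorithm; the cost terms, the weights, and the function name are all assumptions chosen to illustrate the trade-off among quality, rebuffering risk, and switching.

```python
def choose_rendition(bitrates_kbps, throughput_kbps, buffer_s, last_kbps,
                     w_quality=1.0, w_rebuffer=4.0, w_switch=1.0,
                     segment_s=4.0):
    """Pick the bitrate with the lowest weighted cost (illustrative weights)."""
    top = max(bitrates_kbps)

    def cost(kbps):
        quality_cost = 1.0 - kbps / top                  # lower bitrate, lower quality
        download_s = segment_s * kbps / throughput_kbps  # estimated fetch time
        rebuffer_cost = max(0.0, download_s - buffer_s) / segment_s
        switch_cost = abs(kbps - last_kbps) / top        # penalize big jumps
        return (w_quality * quality_cost
                + w_rebuffer * rebuffer_cost
                + w_switch * switch_cost)

    return min(bitrates_kbps, key=cost)

ladder = [400, 1200, 2500, 5000]

# Healthy buffer: stay at the current rendition rather than risk a stall
pick = choose_rendition(ladder, throughput_kbps=2000, buffer_s=8.0, last_kbps=2500)
print(pick)

# Nearly empty buffer: drop down to protect against rebuffering
low_buffer_pick = choose_rendition(ladder, throughput_kbps=2000, buffer_s=2.0, last_kbps=2500)
print(low_buffer_pick)
```

Raising the switching weight makes the player stickier, while raising the rebuffering weight makes it more conservative; tuning those weights is where a product's definition of a good viewing experience gets encoded.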

AI in Quality Assessment

Machine learning made its debut in quality measurement with the VMAF metric back in 2016. Netflix has steadily advanced VMAF since then, adding 4K and phone models, a no-enhancement gain (NEG) mode to counteract VMAF hacking, and the Contrast-Aware Multiscale Banding Index (CAMBI). One upgrade I’d love to see, sooner rather than later, is an HDR-capable open source version.

IMAX’s ViewerScore is another AI-enhanced quality measure that’s been productized to expand functionality. This technology, which evolved from IMAX’s acquisition of SSIMWAVE, is available in two products: StreamAware and StreamSmart. StreamAware provides real-time quality monitoring and reporting, while StreamSmart dynamically adjusts encoder settings to optimize bandwidth usage. The integration of AI has improved the correlation between the ViewerScore and human perception from 90% to 94%, which is remarkably high given the subjective nature of video quality assessment. IMAX claims that StreamSmart can reduce bitrate by 15% or more while maintaining perceived quality. The ViewerScore uses a 0–100 scale, similar to VMAF, but offers additional features such as HDR support, device-specific assessments, and the ability to compare files with different frame rates.
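Correlation figures like these come from comparing metric scores against subjective ratings for the same clips. IMAX doesn't say here which statistic it reports, so the sketch below assumes the Pearson coefficient, one common choice, and uses made-up scores purely for illustration.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: metric scores (0-100) and mean opinion scores (1-5) for five clips
metric_scores = [35, 52, 68, 80, 91]
mos_ratings = [1.8, 2.9, 3.4, 4.1, 4.6]
print(round(pearson(metric_scores, mos_ratings), 3))
```

When evaluating any vendor's correlation claim, it's worth asking which statistic was used and which subjective dataset it was measured against.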

Captioning and Accessibility

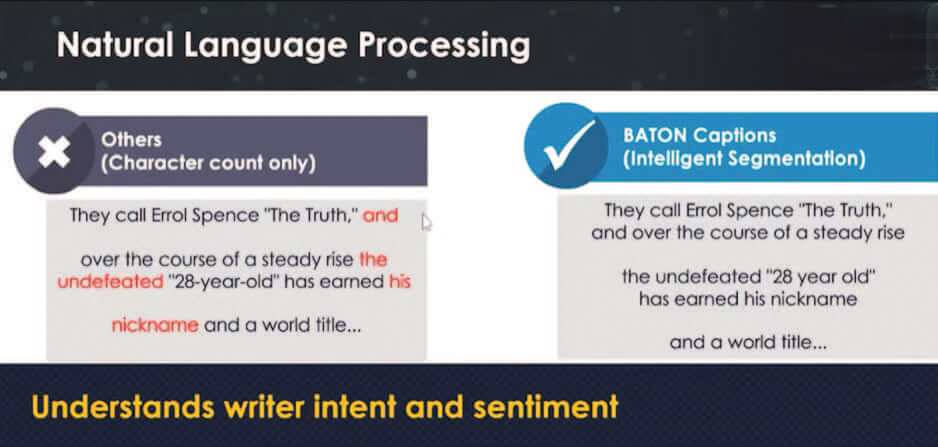

AI is revolutionizing captioning and accessibility in video streaming, with both open source and proprietary solutions making significant strides. One notable example is Interra Systems’ BATON Captions, which combines open source and homegrown AI technologies to enhance captioning capabilities (see Figure 4).

Figure 4. BATON Captions uses natural language processing to deliver better captions.

BATON Captions leverages natural language processing (NLP) to improve the readability and comprehension of captions. The AI breaks down captions into more natural segments, making them easier for viewers to understand. This subtle but impactful improvement demonstrates how AI can enhance the accessibility of video content beyond mere transcription.

In addition, many companies are leveraging Whisper, OpenAI’s open source speech-to-text technology, to add captions to their products or services. For instance, NETINT and nanocosmos offer Whisper-based transcription with additional features tailored to their platforms. These initiatives enable all live streamers to caption their productions, which was once too expensive for all but the highest-profile productions.
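For a sense of how little code a basic Whisper captioning pass takes, here is a minimal sketch that converts timed segments into the SRT caption format. It assumes the open source `openai-whisper` Python package, whose `transcribe` result includes a `segments` list with `start`, `end`, and `text` fields. The helper names are my own, and this is not how NETINT or nanocosmos implement their products. The sample segments are made up so the formatting code runs without the model installed.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    ms = round(seconds * 1000)
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"

def segments_to_srt(segments) -> str:
    """Turn a list of {'start', 'end', 'text'} dicts into SRT text."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    return "\n".join(blocks)

def transcribe_to_srt(media_path: str, model_name: str = "base") -> str:
    """Transcribe a file with Whisper and return SRT captions."""
    import whisper  # requires: pip install openai-whisper (imported lazily)
    model = whisper.load_model(model_name)
    result = model.transcribe(media_path)
    return segments_to_srt(result["segments"])

# Made-up segments in the shape Whisper returns
sample = [
    {"start": 0.0, "end": 2.5, "text": " Welcome to the stream."},
    {"start": 2.5, "end": 5.04, "text": " Let's get started."},
]
print(segments_to_srt(sample))
```

This is file-based transcription; captioning a live stream adds chunking and latency management on top, which is where the platform-specific features come in.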

Content Analysis and User Experience

AI is significantly enhancing content analysis and user experience in video streaming, enabling more personalized and engaging viewer interactions. One company in this space is Media Distillery, which uses AI to improve content segmentation and topic detection. Media Distillery’s technology can automatically segment long-form content into meaningful chapters, making it easier for viewers to navigate and find the segments in which they are interested. For example, in a live sports broadcast, the AI can identify and label different segments such as cycling, hockey, or a Grand Prix, allowing viewers to skip to their preferred sections quickly. This improves the overall viewing experience by providing more control and customization.

IdeaNova’s AI Scene Detection automatically identifies distinct scenes in video content, enabling more efficient navigation. This lets users select specific scenes rather than relying on time-based navigation, with potential future applications in content filtering and scene transition improvements.

AI is also widely used for content recommendation. By analyzing viewing patterns and preferences, AI can suggest relevant content to users, enhancing engagement and retention. Companies like Netflix and Amazon Prime Video have been leveraging AI for personalized recommendations, significantly improving user satisfaction and keeping viewers glued to their respective platforms.

Analytics

Most traditional analytics packages are high on data and low on actionable insights. Streaming services are remedying this by adding AI to their analytics packages. One example is Bitmovin Analytics, which provides session tracking and analysis tools to help identify and resolve issues such as bitrate problems, buffering, and quality degradation. The system offers actionable recommendations based on machine learning, providing context to the data presented.

AI in Programmatic Advertising and Ad Tech

Programmatic advertising, which uses AI and machine learning to automate the buying and selling of ad inventory in real time, has become a cornerstone of digital advertising. AI plays a crucial role in various aspects of programmatic advertising, enhancing efficiency, targeting, and performance.

One key area is real-time bidding (RTB), in which AI algorithms analyze vast amounts of data to make split-second decisions on ad placements. Companies like The Trade Desk and MediaMath use machine learning to optimize bidding strategies, considering factors such as user behavior, context, and historical performance.

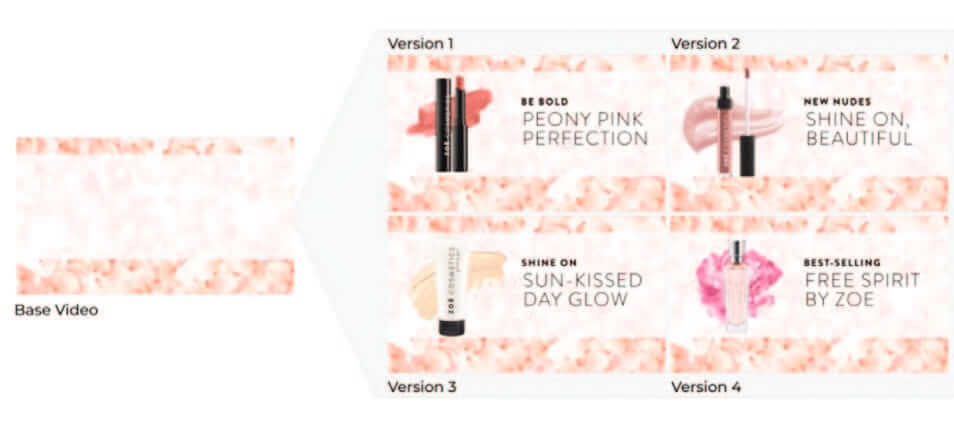

AI is also enhancing creative optimization in programmatic advertising. Platforms like Celtra and Flashtalking (see Figure 5) use machine learning to dynamically adjust ad creatives based on user data and performance metrics, improving engagement and conversion rates.

Figure 5. Flashtalking enables dynamic ad creation customized according to your user data.

In addition, Moloco’s AI-based platform helps smaller streaming companies optimize ad delivery by leveraging machine learning to ensure ad diversity and accuracy, enhancing viewer engagement and retention. By integrating first-party data, Moloco tailors ads to individual preferences, as demonstrated by its work with JioCinema during the Indian Premier League cricket season, when it managed thousands of ad campaigns across different languages and regions, significantly improving ad relevance and maximizing advertising revenue.

Where humans are involved, companies like Operative are leveraging AI to streamline workflows and enhance decision making. Operative’s AI assistant, Adeline, demonstrates how generative AI can automate proposal generation and speed up sales tasks. For example, a sales representative can voice-message a request to Adeline, specifying details like the advertiser, budget, target CPM, and campaign dates. The AI then generates a complete proposal, including appropriate inventory selections and pricing.

Conclusion

We’ve seen how AI is transforming various aspects of the industry, such as encoding, quality assessment, ad tech, and monetization. Streaming Media has offered some tips upfront about how to evaluate AI’s contribution to various technologies. To these, I wanted to add some considerations provided by Rajan Narayanan, CEO of Media Excel. During a recent conversation, he discussed five points Media Excel thought about before adding AI to the product. Three are operational: assessing the cost of computational overhead, the effect on total cost of ownership, and the impact on latency.

The last two points are strategic and provide good guidance when you’re deciding whether to add functions that can fundamentally change the appearance of content.

The final point is system-level operational risk, described by Narayanan as follows: “Anytime you consider implementing AI at a system level and managing the different components at the system level, you should ask, ‘Is the system completely predictable? And are there going to be failure cases that could be potentially catastrophic?’”

This is a reminder that, while humans are certainly not infallible, anytime you seek to replace them with a largely autonomous system, you’d better be sure that the vendor has thought through the implications of the most critical potential points of failure. This is a consideration managers will face with increasing frequency over the coming years.

Otherwise, as I emphasized at the beginning of this article, it’s crucial to evaluate the whole product or service, not just its AI components, to ensure it meets your required ROI or provides measurable benefits. As AI continues to evolve and integrate more deeply into video processing workflows, keeping these considerations in mind will help you make informed decisions and leverage AI’s potential effectively.
